Introduction

Picture this: It's a seemingly ordinary morning, and I'm jolted awake by the realization that my partner is still in bed. Panic mode engaged! "Babe, it's 7:30! You're super late for work!" I exclaim, my voice trembling with the fear of impending doom. Her response? A sleepy smirk and a mumbled reminder that it's Labor Day. Oh, the irony of panicking about work on a day dedicated to... not working.

Now, as any self-respecting insomniac knows, once you're up, you're up. Sleep becomes as elusive as bug-free code. So, what is a wide-awake developer to do? Naturally, I decided to indulge in every software engineer's secret guilty pleasure: watching tech talks in pajamas.

Enter stage left: "Safe Network API Migration Tactics" by Chris Francis & Ashok Varma from Uber, straight from Droidcon SF.

As I dove into the talk, a lightbulb moment struck. During the introduction I also remembered my long-neglected blog. Two birds, one stone? Challenge accepted! I decided to transform my early morning misadventure into a blog post.

The talk turned out to be a gem - relatable, well-structured, and crystal clear. Who knew API migration could be this engaging?

Setting The Stage: The Network Stack Evolution

In the ever-evolving landscape of mobile app development, the choice between a traditional network API stack and gRPC can significantly impact performance and user experience. While traditional RESTful APIs have long been the go-to solution, gRPC is gaining traction for its efficiency and robust feature set. The key difference lies in how these stacks handle data serialization, transport protocols, and client-server interactions. Let's break down the main distinctions:

| Aspect | Traditional API Stack | gRPC Stack |
|---|---|---|
| Data Format | JSON (human-readable but verbose) | Protocol Buffers (compact binary format) |
| Transport Protocol | HTTP/1.1 or HTTP/2 | Built on HTTP/2 (supports multiplexing, bi-directional streaming) |
| API Contract | OpenAPI (Swagger) for documentation | Protocol Buffer service definitions (enables automatic code generation) |
| Performance | Generally slower due to larger payloads and text-based parsing | Faster, with reduced network usage and more efficient parsing |
| Mobile-specific Considerations | Well-supported across platforms, easier to debug | Better performance on limited mobile networks, may require additional setup |
| Ecosystem | Vast ecosystem with numerous tools and libraries | Growing ecosystem, particularly strong in microservices architectures |
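To make the "Data Format" row a little more concrete, here is a rough sketch of why a schema-based binary encoding tends to be smaller than JSON. It uses Python's `struct` module as a stand-in for a binary format; it is not Protocol Buffers (which uses field tags and variable-length integers), and the record and its field names are made up for illustration.

```python
import json
import struct

# Hypothetical record; the field names and values are invented for this example.
record = {"trip_id": 123456789, "eta_seconds": 420, "surge": 1.25}

# Text encoding: field names, quotes and digits travel with every message.
json_bytes = json.dumps(record).encode("utf-8")

# Fixed binary layout that both sides agree on ahead of time (a stand-in for a
# schema): unsigned 64-bit int, unsigned 32-bit int, 32-bit float, little-endian.
binary_bytes = struct.pack(
    "<QIf", record["trip_id"], record["eta_seconds"], record["surge"]
)

# The receiver needs the same layout to decode the bytes again.
decoded = struct.unpack("<QIf", binary_bytes)

print(f"JSON: {len(json_bytes)} bytes, binary: {len(binary_bytes)} bytes")
```

The trade-off is visible in the last step: the binary payload is smaller, but it is unreadable without the shared layout, which is why a contract and code generation matter so much more on the gRPC side.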

The 'Why' Behind Uber's Migration

Based on the results shared in the talk, the engineers wanted to run this migration to prove that gRPC would bring substantial benefits around payload size and latency, thanks to the advantages listed above.

Server Side Costs

For the business impact, I believe cost savings through bandwidth reduction and lower resource usage could have been a solid argument. At Uber's scale, these savings could be huge on the server side.

Better Developer Experience

A binary format usually forces you to strongly type your data models and take a contract-first approach to your APIs. Code generation for the networking layer becomes a necessity from the get-go rather than a nice-to-have.

gRPC also offers built-in support for multiplexing and bi-directional streaming, among other things. I don't know whether these features were actively cited with a clear beneficial use case for this test.

Client Side Improvements

gRPC generally performs better because serializing and deserializing data in a binary format is faster than in text-based formats like JSON or XML.

Lower data usage also matters to users. For Uber, where (I'd assume) a lot of users make bookings over mobile data, this could be a huge cost saver. Less work for the network radio should also lead to a drop in battery usage.

Prerequisites for Migration

The engineers did a great job at highlighting some of the prerequisites they established for the migration.

- Network latency should show a significant reduction.

- Service load could not increase by more than 2%.

- Zero downtime for business.

Why do these prerequisites matter, and how do they impact decision making?

As an advocate for experimentation in software development, I believe establishing prerequisites or a strong hypothesis is crucial before starting any project. This approach helps define the problem's constraints, allowing you to focus on your primary goal without distractions.

Engineers, being naturally creative, might be tempted to address every possible edge case or related issue. However, this can complicate the project and make it harder to ship. Moreover, it can muddy the experimental data, making it difficult to identify which changes are truly impacting your metrics.

Last year, I had the opportunity to discuss some of these concepts on the Fragmented podcast with hosts Donn and Kaushik, in an episode called 'Feature Flags & A/B Testing: A Deep Dive with Ishan Khanna'.

In Uber's case, their engineers wisely set clear prerequisites for their gRPC migration:

- Significant reduction in network latency: This implies they established a baseline metric to measure success. Without this, it would be challenging to justify the effort and resources invested in the migration.

- Limited increase in service load: By setting a hard limit of 2% increase, they ensured the migration wouldn't negatively impact their infrastructure.

- No downtime for the business: This constraint prevented potential revenue loss during the migration process.

These prerequisites demonstrate the importance of setting clear, measurable goals in large-scale projects. They help in:

- Justifying the resources and time invested

- Maintaining focus on the primary objectives

- Ensuring the project delivers tangible benefits

- Preventing unintended negative impacts on the business

By establishing these constraints, Uber's team could confidently proceed with their migration, knowing they had clear criteria for success and safeguards against potential pitfalls.
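One way I like to think about prerequisites like these is as an explicit go/no-go check. The sketch below is my own illustration, not something from the talk: the 2% load limit comes from the prerequisites above, while the 10% latency threshold and all the names are assumptions, since the talk only asks for a "significant" reduction.

```python
from dataclasses import dataclass

MAX_LOAD_INCREASE = 0.02      # from the prerequisites: at most 2% more service load
MIN_LATENCY_REDUCTION = 0.10  # assumption: a placeholder for "significant"


@dataclass
class MigrationMetrics:
    baseline_latency_ms: float
    candidate_latency_ms: float
    baseline_load: float
    candidate_load: float
    downtime_seconds: float


def meets_prerequisites(m: MigrationMetrics) -> bool:
    """Return True only if all three prerequisites hold."""
    latency_reduction = (
        m.baseline_latency_ms - m.candidate_latency_ms
    ) / m.baseline_latency_ms
    load_increase = (m.candidate_load - m.baseline_load) / m.baseline_load
    return (
        latency_reduction >= MIN_LATENCY_REDUCTION
        and load_increase <= MAX_LOAD_INCREASE
        and m.downtime_seconds == 0
    )


# Made-up numbers: 25% faster, 1.5% more load, no downtime.
good = MigrationMetrics(200.0, 150.0, 1000.0, 1015.0, 0.0)
# Same latency win, but 3% more load breaks the budget.
too_heavy = MigrationMetrics(200.0, 150.0, 1000.0, 1030.0, 0.0)

print(meets_prerequisites(good), meets_prerequisites(too_heavy))
```

Writing the criteria down this plainly is the point: a latency win that blows the load budget is still a "no".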

Dissecting the Three-Phase Strategy

Pre-Flight Phase: The Art of Shadow Calls

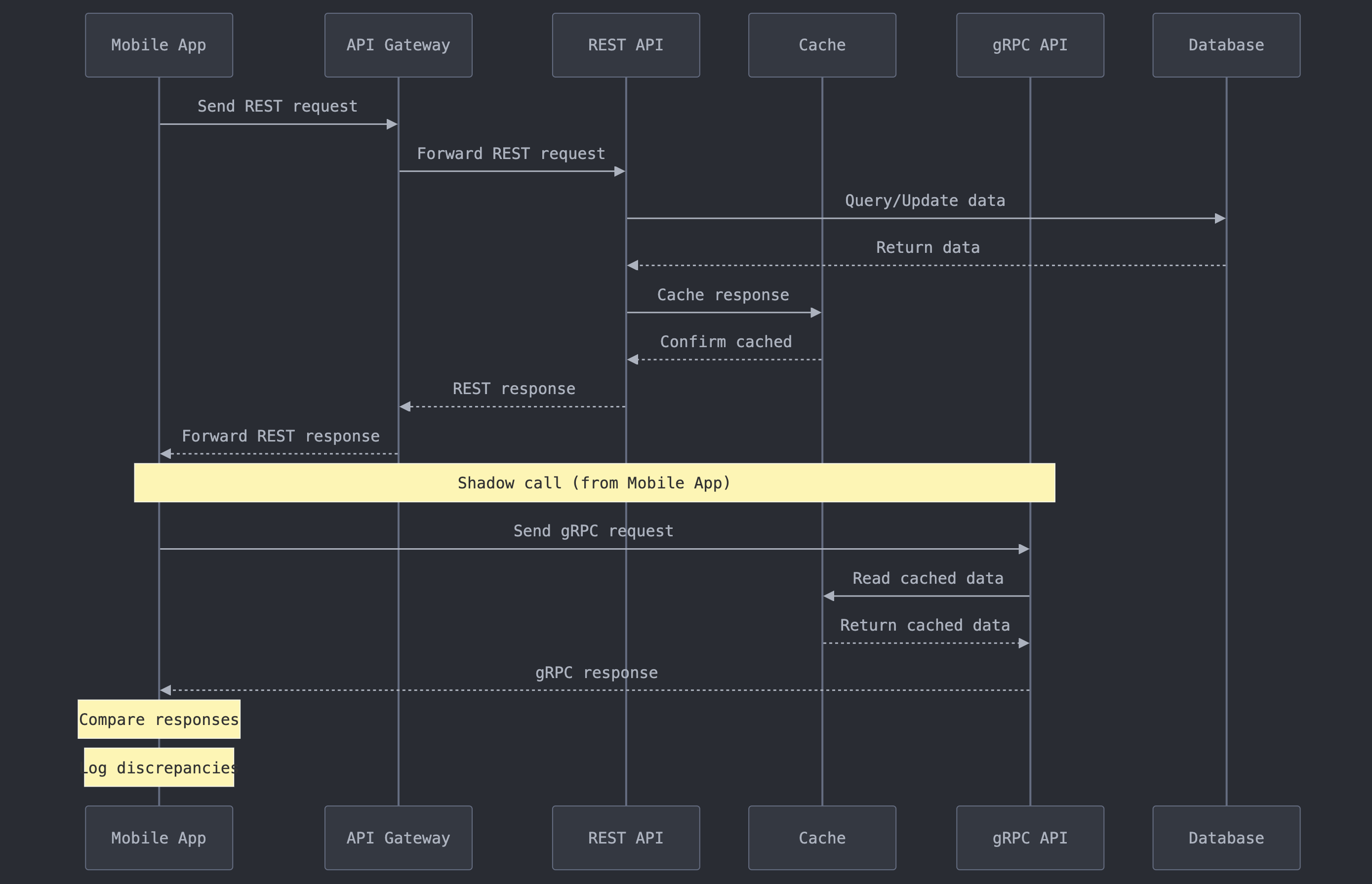

The pre-flight phase of Uber's gRPC migration strategy employed a clever technique called "shadow calls."

These background requests, made alongside the main API calls, allowed the team to validate the response data and ensure consistency without adding significant load to the infrastructure. By closely monitoring key metrics like payload size and round-trip latency, the Uber engineers could confidently assess the performance benefits of the gRPC migration before rolling it out more broadly.
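Here is a minimal sketch of the shadow-call idea as I understood it. The two fetch functions are hypothetical stubs standing in for the real REST and gRPC clients, and the thread pool and mismatch list are my own simplifications; the talk does not describe Uber's implementation at this level of detail.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_via_rest(request):
    # Hypothetical stub for the existing REST call.
    return {"user": request["user"], "experiments": ["a", "b"]}


def fetch_via_grpc(request):
    # Hypothetical stub for the new gRPC call.
    return {"user": request["user"], "experiments": ["a", "b"]}


mismatches = []  # in a real system this would be a metric or a log event
_shadow_pool = ThreadPoolExecutor(max_workers=2)


def _shadow_compare(request, primary_response):
    # Runs in the background; failures here must never affect the user.
    try:
        shadow_response = fetch_via_grpc(request)
        if shadow_response != primary_response:
            mismatches.append((request, primary_response, shadow_response))
    except Exception as exc:
        mismatches.append((request, primary_response, repr(exc)))


def handle_request(request):
    # The user is always served from the existing stack.
    primary_response = fetch_via_rest(request)
    # The new stack is exercised on the side and only observed.
    future = _shadow_pool.submit(_shadow_compare, request, primary_response)
    return primary_response, future


response, shadow = handle_request({"user": "u1"})
shadow.result()  # only waited on here so the example is deterministic
print(response, len(mismatches))
```

The important property is that the shadow result is never returned to the caller, so a bug in the new path shows up in the data rather than in front of a user.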

The diagram at the top of this section illustrates how shadow calls are performed. Let's see how the latency would be calculated and compared between the two stacks.

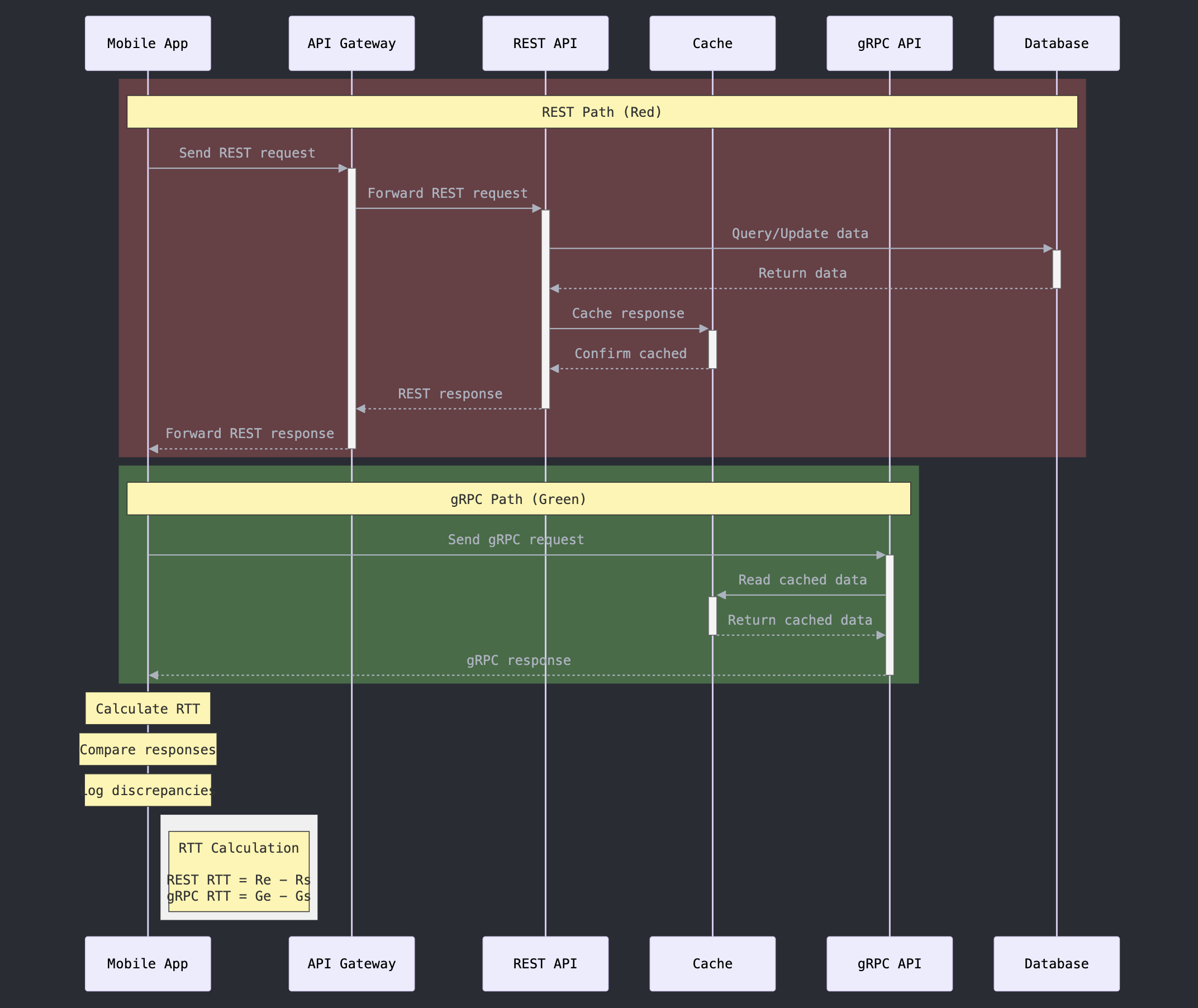

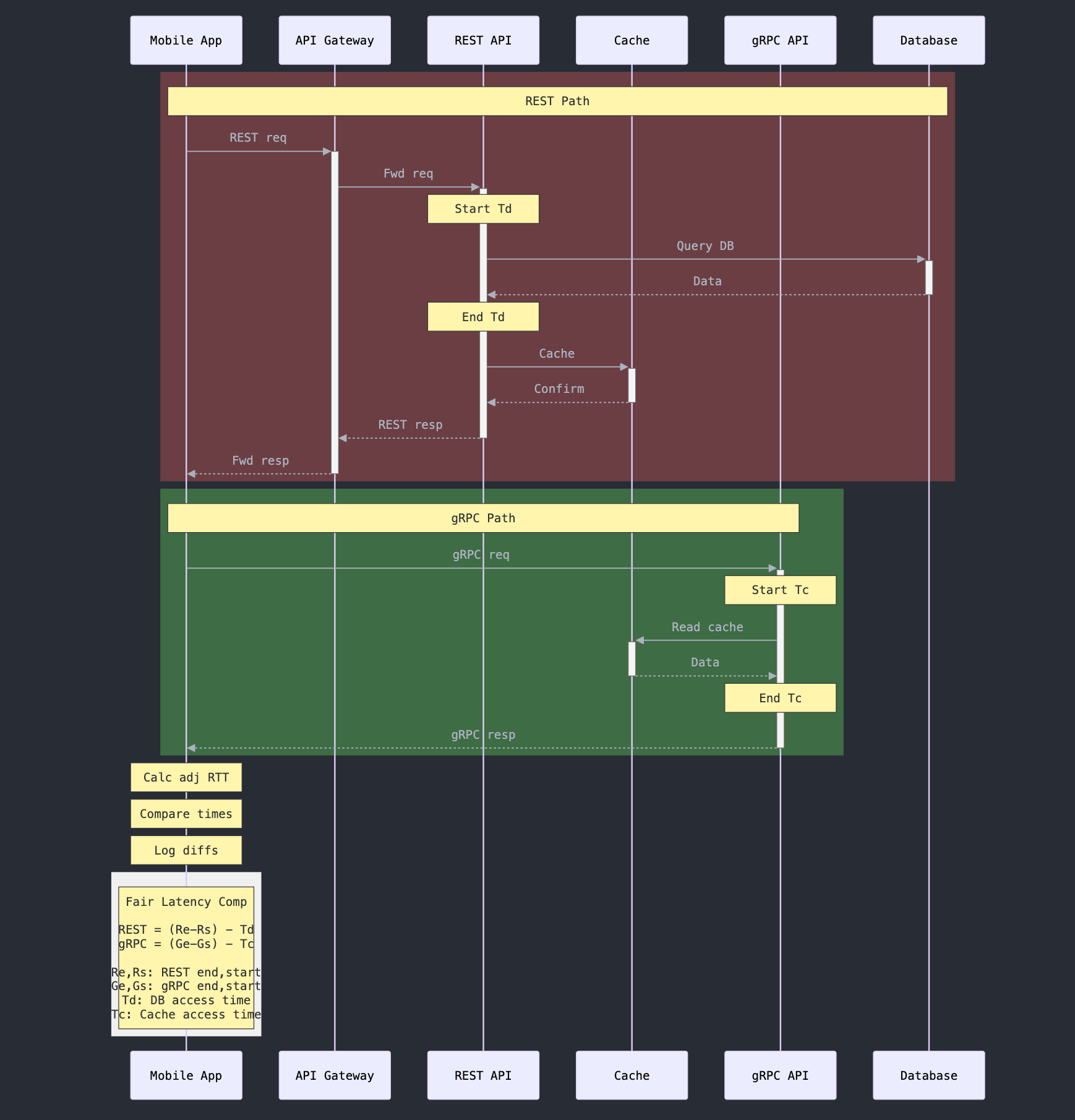

The red path above shows the round-trip time (RTT) for the REST call, and the green path shows the RTT for the gRPC call.

However, there is a catch: we cannot fairly compare these two RTTs as measured. The gRPC call never hits the database and may not do the extra work that the original REST call is subject to. It only reads the response generated by the original call, which is stored in the cache.

So in order to do a fair comparison, we must do the following:

- For REST RTT, we are going to subtract the time it takes to query the DB.

- For gRPC RTT, we are going to subtract the time it takes to query the cache.

When we do that, we end up with a much fairer comparison of the two round-trip times.

Although it seems simple, this is a powerful technique for validating ideas at scale quickly and cheaply, and I believe every engineer should know about it.
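The two subtractions above can be written out as a tiny worked example. All numbers here are invented purely to show the arithmetic; they are not measurements from the talk.

```python
def adjusted_rtt_ms(total_rtt_ms: float, backend_lookup_ms: float) -> float:
    """Remove the storage lookup (DB or cache) so only the network stack is compared."""
    return total_rtt_ms - backend_lookup_ms


# Made-up measurements for a single request.
rest_adjusted = adjusted_rtt_ms(total_rtt_ms=180.0, backend_lookup_ms=60.0)  # DB query
grpc_adjusted = adjusted_rtt_ms(total_rtt_ms=95.0, backend_lookup_ms=5.0)    # cache read

improvement = (rest_adjusted - grpc_adjusted) / rest_adjusted

print(rest_adjusted, grpc_adjusted, improvement)
```

With these invented inputs, the raw RTTs (180 ms vs 95 ms) would suggest a far bigger win than the adjusted ones (120 ms vs 90 ms), which is exactly the distortion the subtraction is meant to remove.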

In-Flight Phase: Navigating the Rollout Minefield

Migrating the very API that controlled Uber's experimentation data posed a unique challenge for the engineering team.

If there was a malfunction in this critical communication channel, rolling back or killing the new gRPC-powered API would become a nightmare. The backend would be unable to communicate with the clients that had already been bucketed into the faulty logic, leaving the company in a precarious position.

To address this, the team implemented a robust client-side safety net using a combination of randomization and local rollback intelligence.

When a client requested the new Experimentation API, a simple coin flip determined whether they would be routed to the updated gRPC service or the legacy REST API. However, the team didn't stop there.

They also incorporated a circuit breaker mechanism that monitored for errors.

If the error count exceeded a predefined threshold of three, the client would automatically fall back to the old API, ensuring a seamless experience for users and preventing cascading failures in the system.
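A rough sketch of how the coin flip and the local fallback could fit together is below. The class and method names are hypothetical, and I'm reading "exceeded a threshold of three" literally (fallback kicks in on the fourth error); a real client would also need to persist the error count on the device, which I've left out.

```python
import random

ERROR_THRESHOLD = 3  # threshold mentioned in the talk


class ApiRouter:
    """Client-side routing between the new gRPC path and the legacy REST path."""

    def __init__(self, rng=None):
        self._rng = rng if rng is not None else random.Random()
        self._grpc_errors = 0

    @property
    def tripped(self) -> bool:
        # Assumption: "exceeded" means strictly more than the threshold.
        return self._grpc_errors > ERROR_THRESHOLD

    def record_grpc_error(self) -> None:
        self._grpc_errors += 1

    def choose_stack(self) -> str:
        # Once the breaker trips, the client decides locally to stay on REST,
        # without needing any instruction from the backend.
        if self.tripped:
            return "rest"
        # Otherwise a coin flip decides the route for this request.
        return "grpc" if self._rng.random() < 0.5 else "rest"


router = ApiRouter(random.Random(0))
for _ in range(4):
    router.record_grpc_error()
print(router.tripped, router.choose_stack())
```

The design choice worth noticing is that the rollback decision lives on the client, which matters precisely because the API being migrated is the one that would normally deliver a kill switch.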

This approach allowed Uber to gradually migrate their Experimentation API to the new gRPC architecture while maintaining complete control and the ability to quickly roll back if any issues arose.

Post-Flight: The Cleanup Conundrum

The deprecation of legacy APIs and services is a common challenge that software engineers often face, but it's one that is frequently underestimated in its complexity.

This is especially relevant for mobile apps, because you will never have 100% of your customers on the newest version of your app. Adoption growth tends toward zero once a given version reaches 75-80% adoption, as I discuss in my post about working with Google's in-app updates API.

In situations like these, you have to make an informed guesstimate about when to cut off support for a given version. According to the Uber engineers, they came up with the following two criteria:

- Budget-based cut-off: for example, when 99.99% of traffic has moved to the new API, sunset the old service and let it error out.

- Force in-app upgrades on clients beyond a certain version.

Somewhat extreme measures like these become necessary when the cost of supporting these users or versions is higher than the revenue they generate for the business.
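The budget-based criterion boils down to a simple traffic-share check. This is a sketch with a hypothetical function name and made-up request counts; only the 99.99% figure comes from the example above.

```python
def can_sunset_legacy(
    new_api_requests: int,
    legacy_api_requests: int,
    required_share: float = 0.9999,
) -> bool:
    """True once the new API carries at least the required share of traffic."""
    total = new_api_requests + legacy_api_requests
    if total == 0:
        return False  # no traffic observed, so there is nothing to base a decision on
    return new_api_requests / total >= required_share


# Made-up counts over some observation window.
print(can_sunset_legacy(999_950, 50))    # 99.995% on the new API
print(can_sunset_legacy(999_000, 1_000)) # 99.9% on the new API
```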

Overcoming Challenges in the Transition to gRPC

While Uber's migration to gRPC offered significant benefits in payload size and latency reduction, the journey was not without its fair share of challenges. As the engineering team navigated the complexities of this transition, they encountered several obstacles that required innovative solutions.

Dynamic Field Validation

One of the primary hurdles was the handling of dynamic fields in the response payloads. These properties would change rapidly between requests, making it challenging to validate them consistently. To address this issue, the team made a strategic decision to consciously exclude these dynamic fields from their testing, prioritizing a pragmatic approach over rigorous validation.
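Excluding dynamic fields from a comparison could look something like the sketch below. The field names are hypothetical examples of values that change on every request; the talk does not list which fields Uber actually excluded.

```python
# Hypothetical examples of fields that differ between any two requests.
DYNAMIC_FIELDS = {"request_id", "server_timestamp"}


def strip_dynamic(payload):
    """Recursively drop dynamic fields from dicts, including dicts nested in lists."""
    if isinstance(payload, dict):
        return {
            key: strip_dynamic(value)
            for key, value in payload.items()
            if key not in DYNAMIC_FIELDS
        }
    if isinstance(payload, list):
        return [strip_dynamic(item) for item in payload]
    return payload


def responses_match(rest_response, grpc_response) -> bool:
    return strip_dynamic(rest_response) == strip_dynamic(grpc_response)


rest = {"request_id": "r-1", "flags": [{"name": "x", "server_timestamp": 1}]}
grpc = {"request_id": "r-2", "flags": [{"name": "x", "server_timestamp": 2}]}
print(responses_match(rest, grpc))
```

The cost of this pragmatism is that a real bug in one of the excluded fields would go unnoticed, so the exclusion list is worth keeping as short as possible.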

Differences in Cloud Providers

Another challenge arose from the varying levels of gRPC support across different cloud providers. Certain cloud platforms had out-of-the-box capabilities for gRPC communication, while others required the team to invest additional efforts in building custom support. This disparity in capabilities added an extra layer of complexity to the migration process, underscoring the importance of thorough due diligence and cross-cloud compatibility considerations.

API behaviors of gRPC and OkHttp Clients

Additionally, the team encountered discrepancies in the API behaviors between the legacy OkHttp client and the new gRPC client. For instance, while OkHttp allowed for the configuration of connection, read, and write socket timeouts, gRPC operated with a different concept known as "Deadlines." The engineers had to develop a mapping mechanism to ensure a smooth transition, acknowledging that such translations are inherently error-prone and may not always provide a perfect one-to-one correspondence.
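To illustrate why this mapping is lossy, here is one possible translation, and it is purely my assumption: the talk does not say which mapping Uber used. It treats the three OkHttp-style timeouts as sequential phases and sums them into a single whole-call budget, which is what a gRPC deadline expresses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SocketTimeouts:
    """OkHttp-style per-phase timeouts, in seconds."""
    connect_s: float
    read_s: float
    write_s: float


def to_deadline_seconds(timeouts: SocketTimeouts) -> float:
    # Assumption: connect, write and read happen one after another, so the
    # whole-call budget is their sum. This is only an approximation: a read
    # timeout limits the gap between bytes, not the total time spent reading,
    # so a slow but steady response could pass under OkHttp and still miss
    # this deadline.
    return timeouts.connect_s + timeouts.write_s + timeouts.read_s


legacy = SocketTimeouts(connect_s=10.0, read_s=10.0, write_s=10.0)
print(to_deadline_seconds(legacy))
```

Summing is just one option. Taking the largest value, or picking a deadline from observed latency percentiles, would be equally defensible, which is a good hint at why the engineers called this kind of translation error-prone.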

These challenges highlight the importance of a well-planned and agile migration strategy, where the engineering team must anticipate and address a range of technical complexities to ensure a successful transition to the new technology stack.

Beyond the Numbers: Interpreting the Results

According to the engineers, the experiment resulted in a 45% payload reduction and a 27% latency improvement with the gRPC stack.

These enhancements have a ripple effect throughout the mobile app ecosystem, optimizing resource utilization, enhancing user experiences, and strengthening Uber's competitive edge.

By reducing payload sizes, Uber minimizes data transmission costs and elevates network efficiency - critical for mobile apps operating at global scale. The latency improvements, in turn, translate to snappier, more responsive app interactions, leaving customers with a smoother, more delightful experience.

Ultimately, Uber's successful gRPC migration serves as a powerful case study, showcasing how strategic infrastructure investments can yield tangible, high-impact results.

Conclusion

So there you have it - Uber's wild ride from REST to gRPC. Who knew API migrations could be this exciting? From shadow calls to coin flips, the team pulled out all the stops to make this transition smooth as silk. The payoff? Faster apps, happier users, and some pretty impressive numbers to brag about at tech conferences.

But beyond the stats, this story reminds us why sharing these behind-the-scenes tech adventures matters. It's not just about Uber - it's about learning from each other, pushing boundaries, and maybe inspiring the next big tech leap. Whether you're a coding wizard or just someone who uses apps (so, everyone), these improvements ripple out to make our digital lives a little bit better.

Next time you're waiting for your Uber, spare a thought for the unsung heroes who made your app just that much snappier. And hey, maybe it'll inspire you to tackle that daunting project you've been putting off. After all, if Uber can flip their entire API system without anyone noticing, who knows what you could pull off?

Enjoyed this tech tale? Share it with your fellow code crusaders and app enthusiasts!